The last post on Computer Go (about a year back) gave a brief description of the game and explained why it is very difficult to approach Go in the same way we tackled Chess.

In a four-page article in IEEE Spectrum, Feng-Hsiung Hsu, the architect and principal designer of IBM’s Deep Blue, discusses in some detail how Go could be solved using brute force and variations of minimax search. The article does not go into great detail about the principal problem in computer Go: the evaluation of board positions. One statement also caught my eye:

On top of that, in 10 years Moore’s law is expected to present us with still another 100-fold speedup.

I was under the impression that Moore’s law had already reached its limit. However, by adding more and better processors, supercomputers will undoubtedly be able to do more operations in parallel. In this article we take a look at the most successful technique that takes advantage of the massive parallel processing power of today’s supercomputers, Monte Carlo techniques using UCT, and deal with the advancements made to it in a later post.

As a side note: for a while now, I had felt that these approaches are not the best way to tackle Go, and that systems that mimic human pattern recognition and learning mechanisms are the way to go (no pun intended). There was recently a small discussion on the Go mailing list about the use of neural networks in tackling Go. This one remark by Alvaro Begue has strongly affected my sentiments on the issue:

People tried to make flying machines by imitating birds for a long time. Planes and helicopters fly, but not like birds do it. Similarly, I believe that whenever we figure out how to make machines that play go well, they will not do it like a brain does it.

The point being made here is that the computing systems that we have today are very different from the wiring of our own brains. We do not have very good brain-like pattern recognition systems. What we do have are really fast parallel systems that perform simple operations at an amazing speed, and our aim should be to learn to use them effectively.

Monte Carlo Go – MoGo and CrazyStone

The introduction of Monte Carlo methods was a great boost to Computer Go. Currently, the best Computer Go program is MoGo, and it uses Monte Carlo evaluation with the UCT algorithm. MC-Go engines explore the game tree up to a certain depth and then use Monte Carlo simulation to evaluate the leaf positions. The biggest issue in computer Go is that there is no direct way to evaluate the resulting board position after the minimax tree has been explored to a certain depth. Board evaluation is relatively easy in Chess, where the pieces and their positions directly give away the value of the board. There is no such simplicity in Go. Territories may change hands, and patterns formed early on may have complex implications across the board.

In Monte Carlo evaluation, multiple simulations are run to evaluate the board position. A stochastic player plays a number of random games against himself, starting from this position and continuing until the end of the game, and the average of all the final scores is taken as an estimate of the position’s value. In some variants, the fraction of games won from the position is used as its value instead.
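To make the idea concrete, here is a minimal sketch of Monte Carlo evaluation using the win-frequency variant. It does not use Go: as an assumption for brevity it uses a toy game in which players alternately remove 1, 2 or 3 stones from a pile and whoever takes the last stone wins. The names `legal_moves`, `random_playout` and `monte_carlo_value` are hypothetical and do not come from MoGo or CrazyStone.

```python
import random


def legal_moves(stones):
    """Toy game (an assumption, not Go): remove 1, 2 or 3 stones per turn."""
    return [m for m in (1, 2, 3) if m <= stones]


def random_playout(stones, rng):
    """Play uniformly random moves until the pile is empty.

    Returns 1 if the player to move in the starting position takes the
    last stone (and so wins), otherwise 0. Requires stones >= 1.
    """
    player = 0  # 0 = the player to move in the position being evaluated
    while True:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return 1 if player == 0 else 0
        player = 1 - player


def monte_carlo_value(stones, n_playouts=2000, seed=0):
    """Estimate the value of a position as the fraction of random games won."""
    rng = random.Random(seed)
    wins = sum(random_playout(stones, rng) for _ in range(n_playouts))
    return wins / n_playouts


if __name__ == "__main__":
    for pile in (1, 2, 5, 10):
        print(pile, monte_carlo_value(pile))
```

A pile of 1 is always won by the player to move, so its estimate is exactly 1.0; for larger piles the estimate only reflects how random players fare, which is the weakness the next paragraph addresses.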

Something that we could improve is the stochastic player. Ideally, to evaluate the board position effectively, the stochastic player should model the opponent as closely as possible. This is understandably very difficult to achieve. Instead, it is far more practical to bias the stochastic player towards picking better moves (as both players are in reality going to draw from a set of good moves) rather than picking among all moves uniformly at random.
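One simple way to bias a stochastic player is to sample moves in proportion to a heuristic weight. The sketch below assumes such weights are already available; the move names and numbers in `MOVE_WEIGHTS` are made up for illustration, and real programs derive their biases from patterns and tactical rules that are not shown here.

```python
import random


def biased_choice(moves, weight_fn, rng):
    """Pick a move with probability proportional to weight_fn(move).

    At least one weight must be positive, otherwise random.choices raises.
    """
    weights = [weight_fn(m) for m in moves]
    return rng.choices(moves, weights=weights, k=1)[0]


# Hypothetical weights: a weight of 0 means the move is never played.
MOVE_WEIGHTS = {"capture": 6.0, "extend": 3.0, "fill_own_eye": 0.0}


def sample_counts(n=3000, seed=0):
    """Count how often each hypothetical move is chosen over n samples."""
    rng = random.Random(seed)
    counts = {m: 0 for m in MOVE_WEIGHTS}
    for _ in range(n):
        move = biased_choice(list(MOVE_WEIGHTS), MOVE_WEIGHTS.get, rng)
        counts[move] += 1
    return counts


if __name__ == "__main__":
    print(sample_counts())
```

Setting every weight to the same value recovers the uniform player, so the same playout loop can be used for both.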

A naive implementation of a Monte Carlo evaluation function, where every leaf is evaluated by running a constant number of simulations, will naturally waste simulations on poor board positions. Instead, it is better to distribute the available simulations so that more promising moves are evaluated more often. This leads us to the exploration vs exploitation problem.

Exploration vs Exploitation problem

An intuitive example of this is the n-armed bandit problem. A gambler wants to maximize his winnings from a slot machine with n arms. Each arm has a different mean payout associated with it. Through repeated plays the gambler tries to maximize his winnings by concentrating his plays on the best levers, except he doesn’t know which lever is the best. In the absence of this knowledge, the gambler must choose between a greedy action (exploitation), where he selects the lever which has given him the highest average reward so far, and exploration, where he tries a different lever in the hope that it will give a greater reward. In MC-Go, during evaluation, every position in the tree is treated as a separate bandit problem, with the candidate moves as its arms.
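The trade-off can be seen in a small simulation. The sketch below is an illustration only: it uses epsilon-greedy exploration, which is not the method used by MoGo (that is UCB1, described in the next section), and the payout probabilities are invented. Each arm pays 1 with a fixed probability and 0 otherwise.

```python
import random


def run_bandit(means, epsilon, pulls=5000, seed=0):
    """Play a Bernoulli bandit, exploring a random arm with probability epsilon.

    epsilon=0 gives the purely greedy gambler. Returns the pull count per arm.
    """
    rng = random.Random(seed)
    counts = [0] * len(means)
    totals = [0.0] * len(means)
    for _ in range(pulls):
        if rng.random() < epsilon:
            arm = rng.randrange(len(means))  # exploration
        else:
            # Exploitation: best average so far (untried arms count as 0).
            averages = [t / c if c else 0.0 for t, c in zip(totals, counts)]
            arm = max(range(len(means)), key=averages.__getitem__)
        reward = 1.0 if rng.random() < means[arm] else 0.0
        counts[arm] += 1
        totals[arm] += reward
    return counts


if __name__ == "__main__":
    means = [0.2, 0.5, 0.8]  # hypothetical payout probabilities
    print("greedy      ", run_bandit(means, epsilon=0.0))
    print("10% explore ", run_bandit(means, epsilon=0.1))
```

In this sketch the greedy gambler never leaves the first arm, because ties are broken in favour of the lowest index and an arm that has never been tried can never look better than one that has. A little exploration is enough to discover the better arms.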

Using UCT (UCB1 applied to Trees) in MC-Go

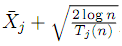

The UCB1 algorithm simply picks the move $a_j$ which maximizes the value $\bar{X}_j + \sqrt{\frac{2 \ln n}{T_j}}$, where $\bar{X}_j$ is the average of all rewards obtained by selecting action $a_j$, $T_j$ is the number of times action $a_j$ was picked, and $n$ is the total number of times any action was picked. The first term favours moves that have done well so far (exploitation), while the second grows for moves that have rarely been tried (exploration). It would be expensive in terms of memory to maintain this information for every bandit problem, that is, for every position that could arise. Therefore, both MoGo and CrazyStone do the Monte Carlo simulation in two phases. In the first phase, called the tree policy, UCB1 is followed; in the second, a default policy (using the stochastic player discussed earlier) is used.
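As a rough sketch, UCB1 in its standard form (average reward plus the square root of 2 ln n / T_j) can be written as below for a plain bandit. The function names and the payout probabilities are assumptions for illustration; trying each arm once before applying the formula is the usual convention, since the formula is undefined for an arm that has never been picked.

```python
import math
import random


def ucb1_select(totals, counts):
    """Return the index of the arm maximising mean + sqrt(2 ln n / T_j)."""
    for arm, c in enumerate(counts):
        if c == 0:
            return arm  # try every arm once before using the formula
    n = sum(counts)
    return max(
        range(len(counts)),
        key=lambda j: totals[j] / counts[j]
        + math.sqrt(2 * math.log(n) / counts[j]),
    )


def run_ucb1(means, pulls=5000, seed=0):
    """Play a Bernoulli bandit with UCB1 and return the pull count per arm."""
    rng = random.Random(seed)
    counts = [0] * len(means)
    totals = [0.0] * len(means)
    for _ in range(pulls):
        arm = ucb1_select(totals, counts)
        reward = 1.0 if rng.random() < means[arm] else 0.0
        counts[arm] += 1
        totals[arm] += reward
    return counts


if __name__ == "__main__":
    print(run_ucb1([0.2, 0.5, 0.8]))  # hypothetical payout probabilities
```

Note that when two arms have the same average, the one tried less often wins: with totals `[5.0, 1.0]` and counts `[10, 2]` both averages are 0.5, but the second arm has the larger exploration bonus and is selected.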

Starting from the board position to be evaluated as the root, MoGo selects a move according to UCB1. It then descends the tree in the same manner until it selects a node that is not yet part of the tree. MoGo then adds this node to the tree. The tree policy ends here. From here on, the default policy using the stochastic player is used to play the game out to the end, and the result is used to update the statistics of the nodes visited on the way down. The video shown illustrates the technique.
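The two phases can be sketched as follows. This is not MoGo’s code: it is a minimal illustration on a toy game (remove 1, 2 or 3 stones from a pile, whoever takes the last stone wins), with hypothetical names throughout. It assumes that untried moves are added one per simulation in random order, that the default policy is uniformly random, and that the final answer is the most visited move at the root.

```python
import math
import random


class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones   # stones left for the player to move here
        self.parent = parent
        self.move = move       # move that led from the parent to this node
        self.children = {}     # move -> Node
        self.visits = 0
        self.wins = 0.0        # wins for the player who moved INTO this node


def legal_moves(stones):
    return [m for m in (1, 2, 3) if m <= stones]


def ucb1_child(node):
    """One tree-policy step: pick the child maximising the UCB1 value."""
    log_n = math.log(node.visits)
    return max(
        node.children.values(),
        key=lambda c: c.wins / c.visits + math.sqrt(2 * log_n / c.visits),
    )


def default_policy(stones, rng):
    """Random playout; True if the player to move at `stones` wins."""
    to_move_wins = False       # with an empty pile the player to move has lost
    mover_is_start = True
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        to_move_wins = mover_is_start  # the last mover is the winner
        mover_is_start = not mover_is_start
    return to_move_wins


def uct_search(stones, iterations=3000, seed=0):
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # Tree policy: follow UCB1 while all moves of the node are in the tree.
        while node.stones > 0 and len(node.children) == len(legal_moves(node.stones)):
            node = ucb1_child(node)
        # Add one new node to the tree, unless the game is already over.
        if node.stones > 0:
            untried = [m for m in legal_moves(node.stones) if m not in node.children]
            move = rng.choice(untried)
            child = Node(node.stones - move, parent=node, move=move)
            node.children[move] = child
            node = child
        # Default policy: random playout from the newly added node.
        mover_wins = not default_policy(node.stones, rng)
        # Back up the result, switching point of view at every level.
        while node is not None:
            node.visits += 1
            node.wins += 1.0 if mover_wins else 0.0
            mover_wins = not mover_wins
            node = node.parent
    return max(root.children.values(), key=lambda c: c.visits).move


if __name__ == "__main__":
    print(uct_search(5))
```

With perfect play the right move from a pile of 5 is to take 1 stone, leaving a multiple of 4, so `uct_search(5)` should settle on that move given enough iterations. Only the statistics of nodes inside the tree are stored; positions reached during the random playout are discarded, which is what keeps the memory cost manageable.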

Resources for further reading

- Homepage of Sylvain Gelly (no longer maintaining MoGo) and MoGo

- CrazyStone and Rémi Coulom

- University of Alberta – A lot of Go-related people here: David Silver (RLGO, RAVE), Csaba Szepesvári (UCB) and M. Enzenberger (NeuroGo)

Most of the content here is from the slides of either Sylvain Gelly or Rémi Coulom. Following up on the side note, there are in fact two fairly strong Go programs built on neural networks: NeuroGo and Steenvreter. Let’s see if I can read up on them and blog about them sometime. But soon, there’ll be a post on RAVE and hints on RL-Go.